The success of your AI model doesn’t start with algorithms – it starts with data. Good data. Relevant, diverse, structured, and abundant.

But finding the right data to train your model? That’s often the hardest part. Whether you’re building a computer vision system, a language model, or a fraud detection engine, your model is only as smart as the data it learns from. And no, scraping random rows off the internet won’t cut it anymore.

In this article, we dive into the most valuable and reliable places you can source data for your AI model training – from well-curated public datasets to unconventional goldmines you might not have thought of. Whether you’re bootstrapping a prototype or scaling a production-grade system, you’ll walk away with practical ideas and resource links you can act on immediately.

Why is it So Hard to Find Good AI Model Training Data?

Getting data isn’t the problem. Getting good data – that’s where the challenge begins.

Most AI projects run into bottlenecks early because real-world data is messy, restricted, and often not fit for model training out of the box. It’s incomplete, inconsistent, biased, or simply not representative of the actual use case. And when it is clean and relevant, it usually comes with legal strings attached.

Data privacy regulations like GDPR, HIPAA, and CCPA have rightfully raised the bar on how user data can be collected, stored, and used – but for AI builders, this means a long paper trail of permissions, anonymization, and compliance processes that delay development.

Then comes the ethical layer. Training models on data scraped from the web without user consent, using synthetic datasets that reinforce existing bias, or relying on surveillance data from public spaces – all raise valid concerns around fairness, accountability, and transparency. Without proper curation and oversight, your model can unintentionally cause harm or discrimination, even if it performs well in testing.

There’s also the domain-specific hurdle. Want to train an AI model for radiology? Financial forecasting? Legal research? You’ll need highly specialized datasets, often locked away behind paywalls, licenses, or institutional firewalls.

In short: finding the right datasets for AI training is hard because it’s not just a technical task. It’s a balancing act between quality, legality, and responsibility.

10 Ethical Places to Get Data for Training Your AI Model

Finding the right data is where most AI projects quietly begin to fail. It’s not just about having a lot of it – it’s about legality, structure, usability, and relevance. Data scraping and web harvesting may seem like shortcuts, but they’re riddled with copyright restrictions, unreliable formatting, and ethical red flags.

This list walks you through ten reliable, legal, and widely respected sources to help you acquire AI model training datasets – whether you’re building an NLP model, a medical AI, or a visual recognition system. Each one offers a different value – from annotation quality and scale, to niche domain specificity and pricing flexibility – so you can find the best fit for your project, not just the most convenient one.

1. Kaggle

Kaggle is one of the most active data communities online. Its datasets section has evolved from simple CSVs to full-blown, multi-format repositories, often attached to research competitions or real-world business problems. You’ll find everything from climate records and retail inventory logs to MRI scans and satellite image tiles. What makes Kaggle special is the ecosystem – public notebooks, leaderboard models, and discussion threads give you a running start. If you’re experimenting, testing hypotheses, or even benchmarking production models, Kaggle gives you annotated, cleaned, and often real-user-generated data, usually under a clearly stated license. It’s free, frequently updated, and community-vetted.

2. Hugging Face

If your work even brushes against natural language processing, Hugging Face is likely already in your toolkit. Beyond its famous Transformers library, the datasets hub is a goldmine of curated corpora for text, audio, and classification tasks. You’ll find Wikipedia dumps, speech emotion clips, Q&A datasets like SQuAD, translation sets like OPUS, and domain-specific corpora for healthcare, legal, and finance. The best part? Everything is directly compatible with their libraries – so the data you find can flow straight into your tokenizers and training scripts without manual cleanup. Hugging Face is one of the rare platforms where usability, quality, and community converge effortlessly.

3. Google Dataset Search

Think of it as Google Search, but filtered only for datasets. It doesn’t store the data, but points you to thousands of sources across government portals, academic labs, NGOs, and niche research projects. You might discover a study on neonatal heart rates from a Swedish hospital, or climate-impact sensor data from a university in Japan. The range is huge and international. It’s particularly useful for narrow domains where generalized data won’t help – like policy modeling, oceanography, or material science. That said, results vary in quality, format, and licensing, so you’ll need to vet each source carefully before integrating.

4. Open Images Dataset by Google

This is one of the most comprehensive computer vision datasets out there. With over 9 million images annotated with image-level labels, bounding boxes, segmentation masks, and object relationships, it’s a dream for CV engineers. It supports over 600 object classes and includes real-world diversity in scenes, angles, and lighting conditions. Whether you’re building an object detector, a scene classifier, or an assistive tech app, Open Images gives you enough visual diversity to go beyond toy examples and into real-world performance. It’s also one of the few free datasets for AI training that rivals proprietary training data in scale and depth.

5. AWS Open Data Registry

This is Amazon’s way of helping developers tap into large-scale datasets without worrying about storage or access friction. The registry, which is also one of the top platforms for collecting AI training data, hosts everything from satellite imagery and weather patterns to medical genomics and public transit data. It’s particularly strong for geospatial and time-series formats, often used in climate research or logistics optimization. You don’t need to download petabytes locally – most datasets can be pipelined directly into AWS services like S3 or SageMaker. If you’re already building in the AWS ecosystem, it can save you weeks of prep work and infrastructure cost.

6. Common Crawl

If you need large-scale web data for training a search engine, LLM, or summarization model, Common Crawl is one of the best public sources. It contains petabytes of web pages, extracted text, and metadata collected through regular crawls since 2008. The structure can be messy and requires serious preprocessing – so it’s not for beginners – but it reflects the real texture of the web: broken pages, multilingual content, embedded spam. That makes it uniquely useful for models that need robustness and scale. Several commercial LLMs have used it as a core training dataset. It’s raw and free to download under Common Crawl’s terms of use, though the crawled pages keep their original copyright, and it is not plug-and-play.
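
To give a feel for the preprocessing mentioned above, here is a minimal sketch that uses only the Python standard library. It assumes you already have raw HTML strings in memory (reading Common Crawl's archive files is out of scope here), and the names `TextExtractor`, `html_to_text`, `clean_pages`, and the `min_words` cutoff are illustrative choices, not part of any Common Crawl tooling.

```python
import hashlib
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text and skips the contents of <script> and <style>."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self._chunks.append(data)

    def text(self):
        # Collapse runs of whitespace into single spaces.
        return re.sub(r"\s+", " ", " ".join(self._chunks)).strip()


def html_to_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def clean_pages(pages, min_words=5):
    """Extract text, drop near-empty pages, and remove exact duplicates."""
    seen = set()
    kept = []
    for html in pages:
        text = html_to_text(html)
        if len(text.split()) < min_words:
            continue  # assumption: very short pages carry little training signal
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(text)
    return kept
```

A real pipeline would typically add language detection, near-duplicate detection, and spam filtering on top of this; the sketch only covers tag stripping, a length filter, and exact deduplication.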

7. UCI Machine Learning Repository

This one’s a classic – still widely used by students, researchers, and even experienced ML practitioners. It offers small to medium datasets in tidy formats like CSV or ARFF, ideal for regression, classification, and clustering tasks. From credit scoring data and handwritten digits to wine quality and breast cancer diagnosis, UCI’s focus has always been clarity and reproducibility. If you’re building models that prioritize explainability or tabular accuracy, this repository gives you clean baselines and interpretable features. It may not support deep learning scale, but it’s unbeatable for clean prototypes and performance tuning.

8. Data.gov (US Government)

Data.gov is one of the most underrated resources – it provides access to over 250,000 datasets from dozens of US federal agencies. You’ll find economic reports, transportation schedules, environmental sensor logs, and social welfare stats. Most federal data here is in the public domain, and many datasets are updated regularly, though it is still worth checking the license listed on each entry. It’s especially valuable if you’re building civic-tech, policy modeling, fintech, or tools that interact with public infrastructure. The challenge? Format inconsistencies and API limitations – but if you have the data wrangling skill, there’s incredible insight waiting to be unlocked.

9. PhysioNet

When you’re working in the medical AI space, most datasets are either proprietary or locked behind IRB approvals. PhysioNet stands out as an open repository that offers ECG signals, EEGs, ICU records, and even wearable biosensor logs. It’s maintained by MIT and partners, and the data is deeply annotated with clinical relevance in mind. Many datasets require credentialed access, but it’s free and entirely legal. Perfect if you’re building diagnostic tools, early-warning systems, or patient monitoring AI.

10. Academic Torrents

Created to support reproducible research, Academic Torrents is a peer-to-peer platform where universities and labs share massive datasets. It stands out for the range of what is shared: raw brain scans, drone surveillance data, social media graphs, and large text corpora. Because it’s P2P, downloads can be fast and decentralized, especially for bulk data. It’s a little old-school in interface, but treasure-hunters will find unique datasets not available anywhere else. Just be cautious – while most of it is legal for academic or research use, always check individual licenses before using in production.

How to Generate Synthetic Data for Your AI Model

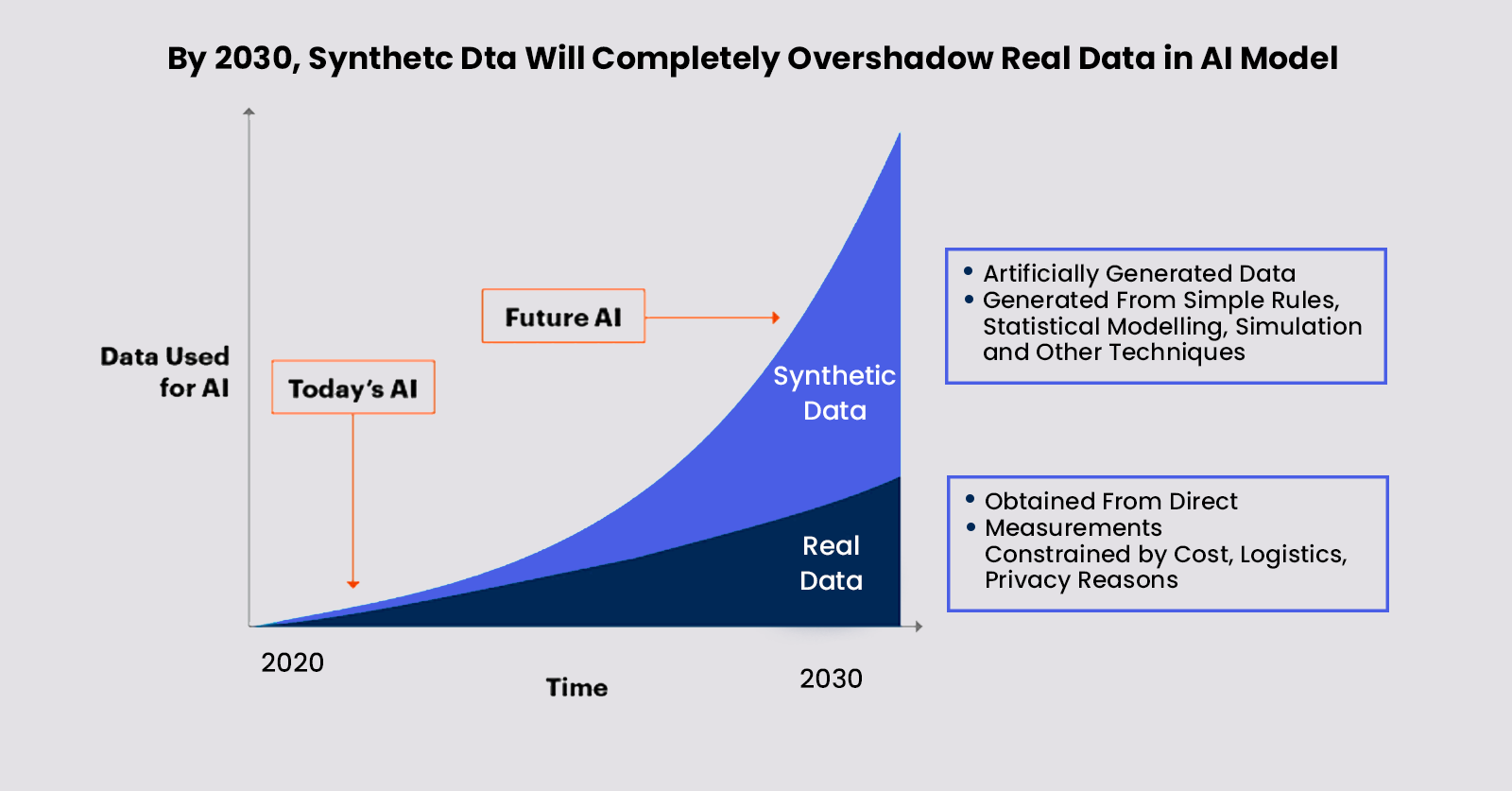

When high-quality, real-world data is hard to find or comes with legal and ethical strings attached, synthetic data generation emerges as a powerful solution for AI model training. Synthetic data is artificially created rather than collected from real events, and it can mimic real data’s statistical properties while protecting privacy and enabling scalable training. Here’s how you can generate synthetic datasets effectively:

- Rule-Based Simulation

One of the simplest methods to generate synthetic data is by writing explicit rules and formulas that mimic real-world processes. For example, if you’re building an AI to detect fraud, you might simulate user transactions with specific patterns, randomly injecting anomalies to represent fraudulent behavior. This approach works well when the domain and relationships are well understood and relatively simple. It lets you control every aspect, but the risk is that it can oversimplify reality and limit model generalization.

- Generative Adversarial Networks

GANs have revolutionized synthetic data creation, especially in images, audio, and even tabular data. A GAN consists of two neural networks, a generator and a discriminator, that compete to improve the realism of generated data. Over time, the generator learns to produce outputs that the discriminator struggles to tell apart from real data. This method is well suited to expanding datasets where real samples are limited, like medical images or rare event logs. However, training GANs requires significant compute power and expertise, and there’s always the challenge of ensuring generated data doesn’t inadvertently reproduce sensitive original data.

- Variational Autoencoders (VAEs)

VAEs are another neural-network-based method for generating synthetic data. They encode real data into a compressed latent space and then decode from this space to create new data points with similar characteristics. VAEs are usually more stable to train than GANs and tend to cover the data distribution more evenly, although their image samples are often blurrier. They’re widely used for image and speech data synthesis and can also generate tabular data. A key benefit is that VAEs offer some control over the data generation process through their latent variables.

- Data Augmentation

Although not purely synthetic data generation, augmentation techniques create new training samples by modifying existing data. In image recognition, this might mean rotating, flipping, or changing brightness. In text data, it could involve synonym replacement, paraphrasing, or back-translation. Augmentation is a quick way to expand datasets and improve model robustness without requiring complex generative models. The downside is that augmented data is always derived from existing data and may not introduce entirely new variations.

- Agent-Based Modeling and Simulation

This technique involves creating virtual “agents” with defined behaviors that interact within a simulated environment. It’s particularly useful in fields like healthcare (simulating patient flows), finance (modeling market behaviors), and urban planning (traffic simulations). By running multiple simulations, you can generate diverse datasets representing various scenarios and outcomes. The realism depends heavily on the quality of the underlying behavioral models and assumptions.

- Procedural Data Generation

Used often in gaming and computer graphics, procedural generation applies algorithmic rules to create large, complex datasets from simple input parameters. For example, a virtual hospital environment can be procedurally generated with different room layouts, equipment, and patient types, creating varied data to train AI systems in medical robotics or diagnostics. It requires domain expertise to design realistic procedural rules but can produce massive, richly annotated datasets efficiently.

- Synthetic Tabular Data Generators

For tabular data, which is common in business analytics, finance, and healthcare, specialized tools like CTGAN (Conditional Tabular GAN) and Synthpop use machine learning to generate synthetic tables that preserve statistical properties and relationships between columns. These tools are invaluable when privacy regulations restrict sharing original data. Synthetic tabular data allows testing and training while reducing the risk of exposing sensitive information.
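
To make the rule-based simulation idea from the list above concrete, here is a minimal sketch of the fraud example using only Python's standard library. Everything specific in it is an assumption made for illustration: the function name `simulate_transactions`, the 2% fraud rate, the amount ranges, and the rule that fraud happens at night with large amounts. Real fraud patterns are far messier.

```python
import random


def simulate_transactions(n, fraud_rate=0.02, seed=42):
    """Generate n synthetic transactions with rule-based fraud anomalies."""
    rng = random.Random(seed)  # local RNG so results are reproducible
    rows = []
    for i in range(n):
        is_fraud = rng.random() < fraud_rate
        if is_fraud:
            # Assumed rule: fraud is unusually large and happens at night.
            amount = round(rng.uniform(2000, 10000), 2)
            hour = rng.choice([0, 1, 2, 3, 4])
        else:
            # Assumed rule: normal spending is skewed toward small amounts
            # during waking hours, capped just below the fraud range.
            amount = round(min(rng.lognormvariate(3.5, 0.8), 1999.99), 2)
            hour = rng.randint(7, 22)
        rows.append(
            {"id": i, "amount": amount, "hour": hour, "is_fraud": is_fraud}
        )
    return rows


if __name__ == "__main__":
    sample = simulate_transactions(1000)
    fraud_count = sum(row["is_fraud"] for row in sample)
    print(f"{fraud_count} of {len(sample)} transactions flagged as fraud")
```

Because the rules separate the two classes perfectly, a model trained only on this data would look far better than it is, which is exactly the oversimplification risk noted for this method.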

Generating synthetic data can bridge critical gaps, accelerate AI development, and keep you compliant with data privacy laws. However, it’s essential to validate synthetic data rigorously to ensure your model learns meaningful patterns rather than artifacts. Combining synthetic and real-world data often yields the best results, balancing realism and volume.
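
One simple way to start the validation described above is to compare a single numeric column in the real and synthetic sets. The sketch below, in plain Python, reports means, standard deviations, and the two-sample Kolmogorov-Smirnov statistic (the largest gap between the two empirical distribution functions). The function names are illustrative, and what counts as an acceptable gap is a judgment call that depends on your data.

```python
import bisect
import random
import statistics


def ks_statistic(sample_a, sample_b):
    """Two-sample KS statistic: the largest gap between empirical CDFs."""
    a = sorted(sample_a)
    b = sorted(sample_b)
    max_gap = 0.0
    for x in a + b:
        # Fraction of each sample that is <= x.
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap


def fidelity_report(real, synthetic):
    """Summarize how closely one synthetic column tracks the real one."""
    return {
        "mean_real": statistics.fmean(real),
        "mean_synth": statistics.fmean(synthetic),
        "stdev_real": statistics.stdev(real),
        "stdev_synth": statistics.stdev(synthetic),
        "ks": ks_statistic(real, synthetic),
    }


if __name__ == "__main__":
    rng = random.Random(0)
    real = [rng.gauss(50, 10) for _ in range(500)]
    close = [rng.gauss(50, 10) for _ in range(500)]    # similar distribution
    shifted = [rng.gauss(70, 10) for _ in range(500)]  # clearly different
    print(fidelity_report(real, close))
    print(fidelity_report(real, shifted))
```

A per-column check like this says nothing about relationships between columns, so it is a first filter, not a full fidelity test.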

What to Look Out For When Using Synthetic Data to Train Your AI Model

Synthetic data can be a game-changer for AI development, but it comes with its own set of challenges that need careful consideration to avoid compromising your model’s performance and reliability. Here are key aspects to watch for:

- Data Quality and Realism

Synthetic data must closely mimic the statistical properties and patterns of real-world data to be effective. Poorly generated synthetic data can introduce unrealistic or biased patterns, leading the model to learn false correlations. Always perform thorough statistical analysis comparing synthetic and real datasets, checking distributions, correlations, and outliers to ensure fidelity.

- Overfitting to Synthetic Artifacts

Because synthetic data is generated through models or rules, it may contain artifacts or repetitive patterns not present in real data. If your AI overfits to these artifacts, it may fail to generalize when exposed to real-world inputs. To mitigate this, blend synthetic data with genuine data where possible and use regularization techniques during model training.

- Privacy Risks from Synthetic Data

One of the advantages of synthetic data is privacy protection, but improper generation methods can inadvertently leak sensitive information, especially if the synthetic data is too similar to original data points. It’s crucial to apply privacy-preserving techniques such as differential privacy, and to test against membership inference attacks, to validate that synthetic data doesn’t compromise confidentiality.

- Domain and Context Appropriateness

Synthetic data generation methods must be carefully tailored to your specific domain. Generic synthetic data that ignores the underlying context can mislead your AI model. For example, in healthcare, patient vital signs should follow medically plausible ranges and dependencies. Collaborate with domain experts to ensure synthetic data respects real-world constraints and nuances.

- Scalability and Computational Resources

Generating high-quality synthetic data, especially with techniques like GANs or agent-based simulations, can be computationally intensive and time-consuming. Plan for adequate infrastructure and optimize generation pipelines to avoid bottlenecks in your AI development lifecycle.

- Validation and Testing

Always validate your AI model not just on synthetic data but also on separate real-world test sets. This ensures that performance metrics reflect practical applicability. Additionally, perform sensitivity analyses to detect if the model relies disproportionately on synthetic data features.

- Ethical Considerations

While synthetic data sidesteps some privacy issues, ethical concerns remain, such as reinforcing biases present in original data or creating misleading data that could affect decision-making. Regularly audit your datasets and model outcomes for fairness and bias, and maintain transparency about the data sources used.

- Version Control and Reproducibility

Keep track of synthetic data generation parameters, random seeds, and versions. This helps in reproducing experiments and debugging any model issues that arise due to changes in synthetic data.
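
As a rough illustration of the record-keeping described in the last item, the sketch below stores the seed, the parameters, a generator version string, and a hash of the output next to the generated data. The function name `generate_with_manifest`, the manifest fields, and the toy Gaussian generator are assumptions made for this example; substitute whatever your own pipeline produces.

```python
import hashlib
import json
import random


def generate_with_manifest(params, seed, generator_version="0.1.0"):
    """Generate toy synthetic values plus a manifest describing the run."""
    rng = random.Random(seed)
    data = [
        round(rng.gauss(params["mean"], params["stdev"]), 4)
        for _ in range(params["n"])
    ]
    # Hash a canonical serialization so any change in output is detectable.
    payload = json.dumps(data).encode("utf-8")
    manifest = {
        "generator_version": generator_version,  # hypothetical version label
        "seed": seed,
        "params": params,
        "sha256": hashlib.sha256(payload).hexdigest(),
    }
    return data, manifest


if __name__ == "__main__":
    params = {"n": 1000, "mean": 0.0, "stdev": 1.0}
    data, manifest = generate_with_manifest(params, seed=123)
    # Store the manifest alongside the dataset, for example as JSON.
    print(json.dumps(manifest, indent=2))
```

Re-running with the same seed and parameters should reproduce the same hash, which gives you a quick check that a dataset used in an experiment is the one you think it is.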

In summary, synthetic data is a powerful tool but requires rigorous checks and balanced use alongside real data. Attention to these factors helps ensure that your AI model training is backed by meaningful, robust patterns that hold up when deployed in real-world scenarios.
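
For the privacy risk discussed above, one crude first check is to look for synthetic rows that sit almost on top of a real row. The sketch below does that with Euclidean distance over numeric features. It is not differential privacy and not a membership inference test, only a quick screen for near-copies; the function names and the threshold are illustrative, and it assumes features have already been scaled to comparable ranges.

```python
import math


def nearest_distance(row, reference_rows):
    """Euclidean distance from one row to its closest reference row."""
    return min(math.dist(row, ref) for ref in reference_rows)


def flag_possible_copies(synthetic_rows, real_rows, threshold=1e-6):
    """Return indices of synthetic rows within `threshold` of any real row."""
    return [
        i
        for i, row in enumerate(synthetic_rows)
        if nearest_distance(row, real_rows) <= threshold
    ]


if __name__ == "__main__":
    real = [(0.10, 0.90), (0.40, 0.20), (0.75, 0.55)]
    synthetic = [(0.10, 0.90), (0.42, 0.31), (0.90, 0.10)]
    # Index 0 duplicates a real record and should be reviewed or dropped.
    print(flag_possible_copies(synthetic, real, threshold=1e-3))
```

This brute-force comparison scales with the product of the two dataset sizes, so larger datasets would need sampling or a nearest-neighbor index.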

How We Approach Data Gathering at CodingworkX

At CodingworkX, we understand that great AI models are only as good as the data they’re trained on. That’s why data gathering isn’t just a step in our development process; it’s a foundational pillar. We begin by working closely with our clients to understand their domain, use case, and data availability. This helps us evaluate whether to leverage existing data, source it externally, or generate synthetic datasets. We prioritize legally and ethically sourced data, using licensed datasets or public repositories only when they meet compliance standards and business relevance.

If real-world data is limited, we build synthetic datasets using techniques like GANs, simulators, or rule-based engines, ensuring statistical fidelity to real-world behavior. But we never rely on synthetic data in isolation: we validate and tune models against real data wherever possible.

We also take data hygiene seriously. Our pipelines include steps for anonymization, bias mitigation, and noise reduction. We integrate feedback loops from models in production to refine the datasets over time, so performance gets better with usage.

For clients in sensitive sectors like healthcare, finance, or government, we align our data handling with applicable regulations (GDPR, HIPAA, etc.) and offer secure data collaboration environments to ensure privacy and control. If you’re looking for a partner who can bring both technical precision and ethical integrity to your AI data strategy, let’s talk.

FAQs

Q. Where can I find data to train my AI model?

Ans. You can find training data from open-source repositories, public datasets, government data portals, internal logs, and third-party data providers. Sources like Kaggle, UCI Machine Learning Repository, Hugging Face Datasets, and Google Dataset Search are popular starting points. For enterprise-grade data, companies often use data aggregators or partner with firms that specialize in custom data sourcing and labeling.

Q. What are the best free datasets for AI training?

Ans. Some of the best free datasets include:

- ImageNet for computer vision

- Common Crawl for NLP and web-scale applications

- COCO for object detection

- OpenStreetMap for geospatial models

- MIMIC-III for healthcare AI

- LibriSpeech for speech recognition

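These datasets are well-documented and widely used across research and production-grade projects. Some of them, such as ImageNet and MIMIC-III, require registration or credentialed access and restrict commercial use, so check the terms before you build on them.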

Q. Which platforms offer high-quality AI training data?

Ans. Several platforms provide curated, labeled, and scalable AI training datasets:

- Scale AI – high-quality annotations and synthetic data generation

- AWS Data Exchange – access to licensed datasets across industries

- Snorkel AI – weak supervision and programmatic labeling

- Lionbridge AI and Appen – for human-labeled data at scale

- Hugging Face – open-source NLP and multimodal datasets with community support

Q. What are the legal considerations when using public data for AI?

Ans. Legal considerations include copyright, licensing, and privacy compliance. Not all public datasets are free to use commercially, and some may have restrictive licenses (e.g., non-commercial use only). When using personally identifiable or sensitive data, you must also comply with regulations like GDPR, CCPA, and HIPAA. It’s critical to vet datasets and ensure that your use case aligns with both ethical and legal standards.

Q. What types of data formats are commonly used in AI training?

Ans. Common data formats include:

- CSV, JSON, XML – for structured data

- JPEG, PNG, TIFF – for images

- WAV, MP3, FLAC – for audio

- TXT, PDF, DOCX – for natural language/text

- Parquet, Avro – for big data pipelines

The choice of format often depends on the data type, model architecture, and scale of the project.

Q. Why choose CodingWorkX to help source and manage your AI training data?

Ans. At CodingWorkX, we go beyond just sourcing data – we help you define what quality looks like for your model. Whether you need raw data, synthetic augmentation, labeling pipelines, or data compliance strategy, we bring deep AI expertise and domain alignment. Our teams ensure your training data is clean, diverse, compliant, and model-ready, so you can focus on building, not wrangling.