AI smart glasses are no longer the stuff of sci-fi – they’re quickly becoming the next frontier in immersive, intelligent computing. From real-time translations and facial recognition to hands-free navigation and AR overlays, these glasses are reshaping how users interact with digital content in the physical world.

But while the hardware continues to evolve, the real magic lies in the software. Building an app for AI smart glasses isn’t just about porting a mobile experience to a new screen – it’s about rethinking how people engage with data when their hands are free, eyes are active, and context is everything.

Whether you’re creating tools for industrial fieldwork, smart retail, healthcare, or everyday productivity, developing for this platform comes with a unique blend of constraints and opportunities. In this guide, we’ll walk through what it takes to design, develop, and launch an AI-powered app tailored for smart glasses – so you’re not just part of the trend, you’re helping shape it.

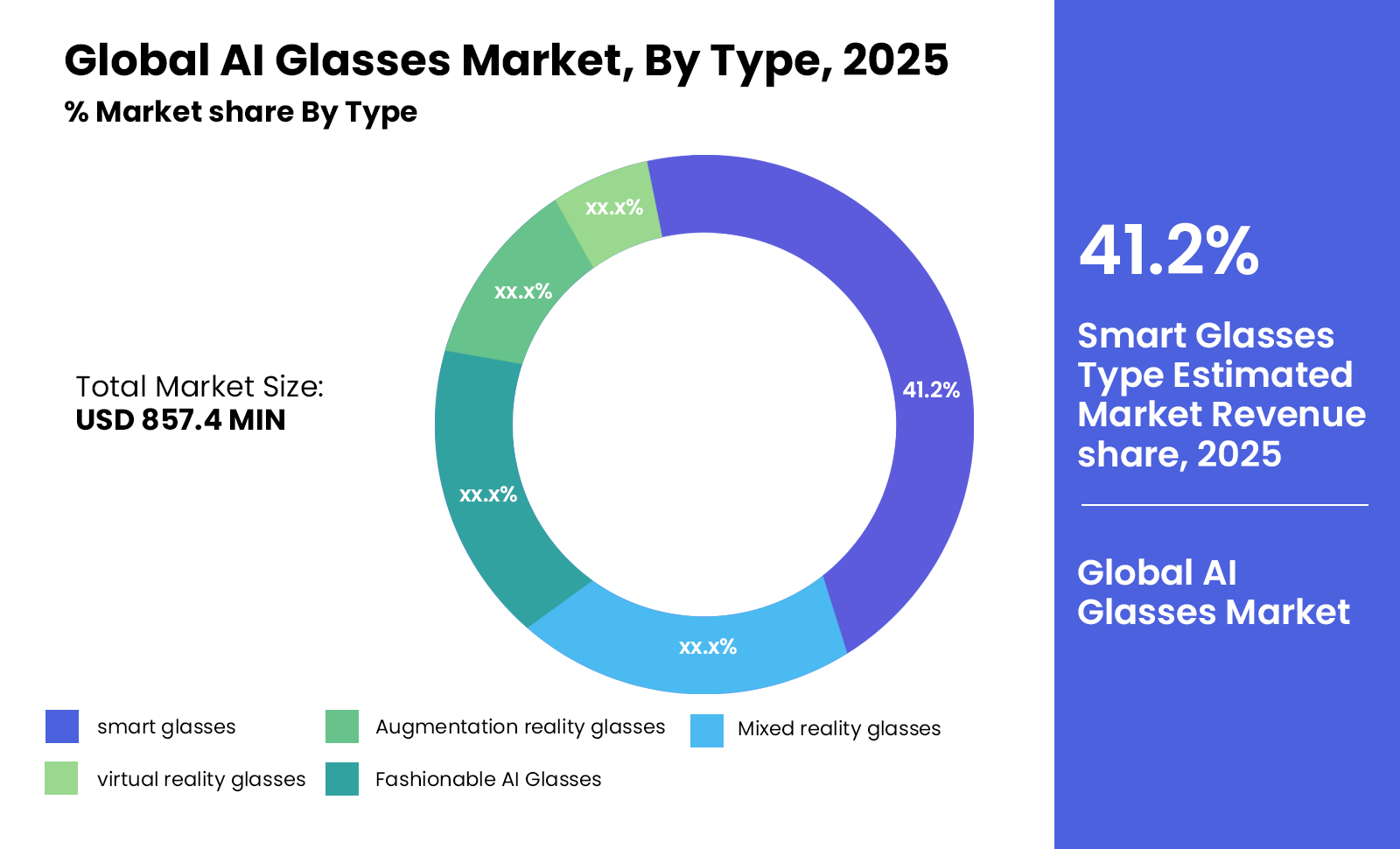

The AI Smart Glasses Market

AI smart glasses are shifting from futuristic novelty to a viable computing platform – fast. In 2024 alone, the market saw over 1.52 million units sold globally, with more than 30 new AI smart glasses models released in just one year, according to a recent GlobeNewswire report. By 2030, unit sales are projected to jump to 90 million, highlighting a steep adoption curve driven by improvements in hardware, AI capabilities, and user interest.

On the valuation front, estimates place the global smart glasses market at between $878 million and $1.3 billion in 2024. Growth forecasts vary, but multiple sources – including MarketsandMarkets – predict a rise to $3–4 billion by 2030, with a CAGR ranging from 11% to nearly 30%, depending on whether the focus is on AI-native devices or broader smart eyewear categories.

Consumer-facing products are helping drive the buzz. Meta’s second-generation Ray-Ban Meta smart glasses reportedly sold between 1 and 2 million units across Europe, the Middle East, and Africa by the end of 2024, with models priced competitively around $300. Meanwhile, Xiaomi, Snap, and Apple are all positioning themselves to compete, with lighter frames, improved voice AI, and better battery life – some stretching to 8.5 hours on a single charge, as highlighted by Android Central.

Enterprise adoption is also accelerating. From warehouses and field service teams to surgical suites and remote training, companies are seeing value in hands-free AR and AI-assisted overlays. According to Wired, 2025 is shaping up to be the year smart glasses shift from fringe experimentation to mainstream integration – both in business and consumer markets.

In short, AI smart glasses aren’t just another device – they’re becoming a new interface layer for the internet. And the opportunity to build apps that shape how users experience this layer is unfolding right now.

What Changes When You Build for AI Smart Glasses Instead of Phones

Building for AI smart glasses isn’t a matter of shrinking down a mobile app – it’s about rethinking how users interact with technology altogether. The shift from handheld devices to heads-up, hands-free experiences changes everything: UI, UX, input methods, and even what “useful” means.

Here’s what sets smart glasses app development apart:

Context is king

AI glasses are deeply situational. Unlike phones, which users actively engage with, smart glasses are passively aware – constantly interpreting the environment to surface relevant information. Your app isn’t just responding to taps and clicks – it’s predicting needs based on location, time, objects in view, and voice cues.
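
To make "predicting needs from context" concrete, here is a minimal sketch of a rule-based relevance score. Every name in it (`Context`, `Card`, `score`, `pick_card`) and every weight is an assumption made for illustration; a production app would more likely learn these weights or use a model, but the shape of the decision is similar.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Snapshot of what the glasses currently know (all fields hypothetical)."""
    location: str
    hour: int                                   # 0-23, local time
    objects_in_view: set = field(default_factory=set)
    last_utterance: str = ""


@dataclass
class Card:
    """A glanceable piece of information the app could surface."""
    title: str
    locations: set
    objects: set
    keywords: set
    active_hours: range


def score(card: Card, ctx: Context) -> int:
    """Count how many context signals match this card."""
    points = 0
    if ctx.location in card.locations:
        points += 2                             # location is weighted highest here
    if ctx.hour in card.active_hours:
        points += 1
    points += len(card.objects & ctx.objects_in_view)
    words = set(ctx.last_utterance.lower().split())
    points += len(card.keywords & words)
    return points


def pick_card(cards, ctx, min_score=3):
    """Return the best-matching card, or None if nothing is relevant enough."""
    best = max(cards, key=lambda c: score(c, ctx), default=None)
    if best is None or score(best, ctx) < min_score:
        return None                             # show nothing rather than noise
    return best
```

The `min_score` floor is the important design choice: on a heads-up display, surfacing nothing is usually better than surfacing something irrelevant.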

The interface disappears

Forget buttons, dropdowns, and swipe gestures. With smart glasses, visual real estate is limited, and the UI often needs to be translucent, minimal, and contextually triggered. You’re designing for glances, not scrolls. The best smart glasses apps feel almost invisible – there when needed, gone when not.

Voice and gesture replace touch

Since users won’t be holding the device, traditional tap-based navigation is largely off the table (some frames offer a small touchpad on the temple, but little more). That means apps need to respond reliably to voice commands, head movement, and sometimes even hand gestures. This introduces new accessibility challenges – and opportunities for more intuitive interaction.

Movement changes everything

Unlike phones, which users often engage with while stationary, smart glasses are typically used on the move – while walking, working, or multitasking. That puts constraints on cognitive load, notification timing, and interaction complexity. Simplicity becomes not just good UX, but critical for safety and usability.

Real-world integration is mandatory

Smart glasses don’t just overlay data – they merge it with the physical world. That means your app may need to work with object recognition, real-time language processing, or spatial mapping. Integrations with AI models, sensors, and AR frameworks aren’t add-ons – they’re core functionality.

In short, developing for AI smart glasses requires more than responsive layouts or cross-platform builds. It demands a shift in product thinking, where the app is not a place the user goes to – it’s an assistant that meets them where they are.

Core Features to Consider in a Smart Glasses App

When you’re building for AI smart glasses, every feature has to justify its presence. You’re designing for moments, not sessions – for real-world tasks, not screen time. The right feature set depends on your use case, but across industries, a few core components tend to form the foundation of most successful apps.

Here’s what to consider integrating:

1. Voice Command and Natural Language Processing

Voice is often the primary input for smart glasses. Your app should be able to interpret natural speech, handle variations in phrasing, and respond quickly – even in noisy environments. Integrating tools like Whisper, Google Speech-to-Text, or domain-specific NLP models will be key.
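
Once a speech-to-text engine has produced a transcript, the app still has to map loosely phrased speech to a command. The sketch below uses fuzzy string matching from Python’s standard `difflib` module as a stand-in for a real NLP model. The `INTENTS` table, the function names, and the `0.6` cutoff are assumptions for illustration only.

```python
import difflib
import re

# Hypothetical intents, each with a few example phrasings.
INTENTS = {
    "start_recording": ["start recording", "begin recording", "record this"],
    "take_photo": ["take a photo", "take a picture", "capture this"],
    "next_step": ["next step", "go to the next step", "what is next"],
}


def normalize(text: str) -> str:
    """Lowercase and strip punctuation so 'Take a photo!' matches 'take a photo'."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower()).strip()


def match_intent(transcript: str, cutoff: float = 0.6):
    """Return (intent, similarity) for the closest phrasing, or (None, 0.0)."""
    spoken = normalize(transcript)
    best_intent, best_ratio = None, 0.0
    for intent, phrases in INTENTS.items():
        for phrase in phrases:
            ratio = difflib.SequenceMatcher(None, spoken, phrase).ratio()
            if ratio > best_ratio:
                best_intent, best_ratio = intent, ratio
    if best_ratio < cutoff:
        return None, 0.0                        # too far from anything we know
    return best_intent, best_ratio
```

Character-level similarity is crude, but it tolerates filler words and small transcription errors, and it runs on-device with no model download. A domain-specific NLP model can replace `match_intent` later without changing the code that calls it.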

2. Real-Time Object Recognition or Scene Detection

If your app needs to identify physical objects, locations, or people, computer vision is essential. Use on-device models or cloud-based APIs to detect what’s in front of the user – and then trigger actions, overlays, or insights accordingly.
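
The "detect, then trigger" step can be sketched independently of any particular vision model. In the example below, `Detection` and `OverlayTrigger` are hypothetical names, and the confidence threshold and cooldown values are assumptions; the point is that weak detections are ignored and the same overlay is not re-triggered on every frame.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One result from a vision model (label and confidence in [0, 1])."""
    label: str
    confidence: float


class OverlayTrigger:
    """Maps detections to overlay text, ignoring weak or repeated hits."""

    def __init__(self, overlays, min_confidence=0.7, cooldown_s=5.0):
        self.overlays = overlays                # label -> text to show
        self.min_confidence = min_confidence
        self.cooldown_s = cooldown_s
        self._last_shown = {}                   # label -> time of last overlay

    def handle(self, detections, now):
        """Return overlay texts to show for this frame at time `now` (seconds)."""
        to_show = []
        for det in detections:
            if det.confidence < self.min_confidence:
                continue                        # too uncertain to act on
            if det.label not in self.overlays:
                continue                        # nothing configured for this object
            last = self._last_shown.get(det.label)
            if last is not None and now - last < self.cooldown_s:
                continue                        # shown recently; avoid flicker
            self._last_shown[det.label] = now
            to_show.append(self.overlays[det.label])
        return to_show
```

Passing `now` in explicitly, rather than reading the clock inside `handle`, keeps the logic easy to test on a desk before it ever runs on a device.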

3. Augmented Reality (AR) Overlays

While not all smart glasses support full AR, many offer basic spatial projection. Overlaying contextual information – like step-by-step instructions, product specs, or translated text – can radically improve the user experience without distracting from the real world.

4. Hands-Free Notifications and Alerts

Smart glasses should deliver non-intrusive, glanceable notifications. Whether it’s a status update, instruction, or warning, alerts need to be minimal, timely, and context-sensitive. No one wants popups blocking their field of view.
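
One way to keep alerts minimal and well-timed is a small priority queue that enforces a quiet interval between non-critical alerts. The following is a sketch under assumed rules: the priority levels, the 10-second gap, and the 40-character limit are illustrative choices, not platform requirements, and `GlanceQueue` is a hypothetical name.

```python
import heapq
import itertools

# Lower number = more urgent. Names are illustrative.
CRITICAL, NORMAL, LOW = 0, 1, 2


class GlanceQueue:
    """Holds pending alerts and releases at most one per quiet interval."""

    def __init__(self, min_gap_s=10.0, max_chars=40):
        self.min_gap_s = min_gap_s
        self.max_chars = max_chars
        self._heap = []
        self._order = itertools.count()         # tie-breaker keeps FIFO order
        self._last_shown_at = None

    def push(self, text, priority=NORMAL):
        # Truncate so the alert stays readable at a glance.
        if len(text) > self.max_chars:
            text = text[: self.max_chars - 3] + "..."
        heapq.heappush(self._heap, (priority, next(self._order), text))

    def next_alert(self, now, user_is_moving=False):
        """Return the next alert to display, or None if it should wait."""
        if not self._heap:
            return None
        priority = self._heap[0][0]
        if priority != CRITICAL:
            if user_is_moving and priority == LOW:
                return None                     # defer low-priority alerts in motion
            recently = (self._last_shown_at is not None
                        and now - self._last_shown_at < self.min_gap_s)
            if recently:
                return None                     # respect the quiet interval
        _, _, text = heapq.heappop(self._heap)
        self._last_shown_at = now
        return text
```

Critical alerts bypass both checks, so a safety warning is never held back by a recent status update.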

5. Edge AI Capabilities

Many smart glasses run lightweight AI models locally, enabling features like real-time transcription, gesture recognition, or facial detection without relying on cloud latency. Designing your app to work offline or with low connectivity can be a game-changer – especially in field or enterprise settings.
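
An edge-first design can be expressed as a simple fallback chain: run the local model, and only go to the cloud when the local result is weak and a connection exists. In this sketch, `local_model` and `cloud_model` are hypothetical callables standing in for real inference clients, and the `0.8` confidence floor is an assumption.

```python
class CloudUnavailable(Exception):
    """Raised by the cloud client when there is no usable connection."""


def transcribe(audio, local_model, cloud_model, online, min_local_confidence=0.8):
    """Try the on-device model first; use the cloud only to improve a weak result.

    Both models are callables that take audio and return (text, confidence).
    Returns (text, source) where source is "edge" or "cloud".
    """
    text, confidence = local_model(audio)
    if confidence >= min_local_confidence or not online:
        return text, "edge"
    try:
        cloud_text, _ = cloud_model(audio)
        return cloud_text, "cloud"
    except CloudUnavailable:
        return text, "edge"                     # degrade gracefully to local result
```

Because the local model always runs first, the app keeps working in a basement or on a factory floor; connectivity only ever improves the result.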

6. Cloud Sync + Companion App

While the core experience happens on the glasses, most products need a mobile or desktop companion app for managing settings, syncing data, or accessing more detailed dashboards. This hybrid model lets you keep the smart glasses UI lean while offering power users deeper control elsewhere.

7. Privacy, Permissions & User Control

Recording, scanning, or analyzing real-world environments raises legitimate privacy concerns. Your app should make it easy for users to control what’s being captured, when, and where the data is stored – especially if facial recognition or live video processing is involved.

Ultimately, your feature choices should serve one purpose: amplify the user’s awareness, not distract from it. Every second of delay, every bit of visual clutter, and every confusing interaction breaks immersion. Building for smart glasses means building light, fast, and purposefully.

Tech Stack for AI Smart Glasses App Development

Building an app for AI smart glasses isn’t just about choosing the latest frameworks – it’s about aligning your stack with real-time demands, limited screen space, edge processing, and hardware constraints. Whether you’re building for consumer or enterprise use, your tech stack needs to support hands-free interaction, low latency, and high contextual intelligence.

Here’s a breakdown of what your stack might include:

Platform & OS Support

- Android-based SDKs: Many AI smart glasses (e.g., Vuzix, Xreal (formerly Nreal), Lenovo ThinkReality) run on Android or Android forks. This makes Java/Kotlin and Android Studio common choices.

- Custom SDKs: Ray-Ban Meta, Magic Leap, and others offer proprietary SDKs that require specialized dev environments.

Tip: Always check the manufacturer’s developer documentation for hardware-level capabilities, supported inputs (voice, gesture, eye tracking), and sensor APIs.

Voice Interaction & NLP

- Google Speech-to-Text or Whisper (OpenAI) for voice input (Whisper transcribes audio in chunks, so near-real-time use typically needs a streaming wrapper around it)

- Dialogflow, Rasa, or Amazon Lex for conversational flows

- Edge-based NLP for low-latency responses in no/low-connectivity environments

AI & Machine Learning Models

- TensorFlow Lite or ONNX Runtime for running small, optimized models on-device

- YOLOv5 / YOLO-NAS or MediaPipe for object, gesture, or face detection

- OpenCV for image processing, overlays, and visual tracking

AR/Spatial APIs

- ARCore (for compatible Android-based glasses)

- Unity3D with MRTK (Mixed Reality Toolkit) for AR overlays, gestures, and spatial mapping

- 8th Wall for web-based AR, or Vuforia for native and hybrid AR implementations

Backend & Sync

- Firebase or AWS Amplify for real-time sync, notifications, and data handling

- GraphQL or REST APIs for communication between the glasses and companion apps

- SQLite or Room for offline-first functionality
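
Offline-first usually means writing every event to local storage first and forwarding it later. The sketch below shows that "outbox" pattern with Python’s built-in `sqlite3` module; on an Android-based device the same idea would typically sit on Room. The `Outbox` class and the `send` callback are hypothetical names, and `send` stands in for whatever REST or GraphQL call your backend exposes.

```python
import json
import sqlite3


class Outbox:
    """Stores events locally and forwards them when a connection is available."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
        )

    def record(self, event: dict) -> None:
        """Always write locally first, so nothing is lost when offline."""
        with self.db:                           # commits on success
            self.db.execute(
                "INSERT INTO outbox (payload) VALUES (?)", (json.dumps(event),)
            )

    def pending(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def flush(self, send) -> int:
        """Send queued events in order; stop at the first failure, keep the rest.

        `send` uploads one event and returns True on success.
        """
        sent = 0
        rows = self.db.execute(
            "SELECT id, payload FROM outbox ORDER BY id"
        ).fetchall()
        for row_id, payload in rows:
            if not send(json.loads(payload)):
                break
            with self.db:
                self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))
            sent += 1
        return sent
```

An event is deleted only after `send` confirms it, so a dropped connection mid-sync leaves the remaining events queued for the next attempt.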

Companion App Stack

- React Native, Flutter, or Swift/Kotlin depending on your target device

- Used to manage device settings, access dashboards, or store usage logs

Security & Privacy

- End-to-end encryption for sensitive data

- On-device processing to reduce unnecessary cloud transmission

- Role-based access controls, especially in enterprise or healthcare apps
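
Role-based access control can start as a plain lookup table checked before any sensitive action. The roles and permission names below are invented for illustration (a hypothetical clinical deployment); real deployments would load them from the organization’s identity system.

```python
# Hypothetical roles and permissions for a clinical deployment.
ROLE_PERMISSIONS = {
    "technician": {"view_checklist"},
    "nurse": {"view_checklist", "view_patient_summary"},
    "surgeon": {"view_checklist", "view_patient_summary", "record_video"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Unknown roles get no permissions at all (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def require(role: str, permission: str) -> None:
    """Raise before a sensitive action runs, instead of checking afterwards."""
    if not is_allowed(role, permission):
        raise PermissionError(f"role '{role}' may not '{permission}'")
```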

Choosing the right tech stack for AI smart glasses is less about what’s trendy – and more about what’s lightweight, responsive, and context-aware. You’re not building for a supercomputer in someone’s pocket – you’re building for the smallest, smartest device in their line of sight.

Challenges You’ll Face When Building for Smart Glasses (and How to Solve Them)

Building for AI smart glasses is exciting – but also full of firsts. You’re working with a platform that’s still evolving, where best practices aren’t always well-documented, and user expectations are still forming. That means developers and founders alike face a steep learning curve.

Here are some of the biggest challenges – and how to navigate them:

1. Hardware Limitations

Most smart glasses have less processing power, memory, and battery capacity than smartphones. Heavy AI models or complex UIs can cause lag, overheating, or short battery life.

How to solve it:

Use lightweight, optimized models (e.g., TensorFlow Lite) and offload heavy lifting to the cloud when possible. Build UIs that are minimal and motion-light to conserve power.
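
Beyond choosing a small model, you can also run it less often when the device is low on power or running hot. Here is a minimal sketch of that idea; the function name and all thresholds are illustrative assumptions, not vendor guidance.

```python
def inference_interval_ms(battery_pct, temperature_c, base_ms=100):
    """Pick how often to run the vision model, slowing down to save power."""
    interval = base_ms
    if battery_pct < 20:
        interval *= 4                           # aggressive saving when nearly empty
    elif battery_pct < 50:
        interval *= 2
    if temperature_c >= 40:
        interval *= 2                           # back off further when running hot
    return interval
```

With the defaults, a cool device at 80% battery runs inference every 100 ms, while a hot device at 10% drops to once every 800 ms.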

2. Unfamiliar UX Paradigms

Designing for a heads-up display is completely different from designing for a phone. Users are in motion, often multitasking, and can’t afford to focus too long on small floating text or buttons.

How to solve it:

Design for glanceability. Use voice, sound cues, and contextual triggers to replace or supplement visual UI. Test in real-world conditions, not just on emulators.

3. Unstable or Proprietary SDKs

Some smart glasses SDKs are still maturing. You may face limited documentation, fragmented OS support, or inconsistent sensor access.

How to solve it:

Start with well-supported platforms (like Vuzix, Magic Leap, or Meta) and join their developer communities. Expect some custom device handling and write modular code for portability.
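
"Modular code for portability" usually means the app talks to a small interface of its own, with one adapter per device behind it. The classes below are hypothetical stand-ins, not any vendor’s SDK; in practice each adapter would wrap the real SDK calls for that device.

```python
from abc import ABC, abstractmethod


class GlassesDevice(ABC):
    """Minimal interface the app codes against (hypothetical, not a vendor API)."""

    @abstractmethod
    def show_text(self, text: str) -> None: ...

    @abstractmethod
    def supports(self, capability: str) -> bool: ...


class DisplayGlasses(GlassesDevice):
    """Stand-in for a device with a heads-up display."""

    def __init__(self):
        self.screen = []

    def show_text(self, text):
        self.screen.append(text)

    def supports(self, capability):
        return capability in {"display", "voice"}


class AudioOnlyGlasses(GlassesDevice):
    """Stand-in for a device with speakers but no display."""

    def __init__(self):
        self.spoken = []

    def show_text(self, text):
        self.spoken.append(text)                # fall back to reading text aloud

    def supports(self, capability):
        return capability in {"voice"}


def show_step(device: GlassesDevice, step: str) -> None:
    """App logic stays the same regardless of which device is attached."""
    prefix = "" if device.supports("display") else "Next: "
    device.show_text(prefix + step)
```

When an SDK changes or a new device arrives, only the adapter has to be rewritten; `show_step` and the rest of the app logic stay untouched.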

4. Voice Recognition Reliability

Voice is a core input – but ambient noise, accents, or connectivity issues can break the experience.

How to solve it:

Use on-device NLP fallback, add error-handling for missed commands, and train voice models with relevant vocabulary. Always allow for simple, interruptible flows.
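
Error handling for missed commands can be modeled as a short loop: re-prompt a limited number of times, let the user cancel at any point, and fall back to something visible if recognition keeps failing. The sketch below assumes a recognizer that returns `(text, confidence)` pairs; the function name, thresholds, and prompt strings are all illustrative.

```python
def run_voice_turn(results, commands, min_confidence=0.75, max_attempts=3):
    """Walk through recognition results for one command and decide what to do.

    `results` is an iterable of (text, confidence) pairs, one per attempt.
    Returns a list of actions such as ("execute", "next step").
    """
    actions = []
    for attempt, (text, confidence) in enumerate(results, start=1):
        text = text.strip().lower()
        if text in ("cancel", "stop"):
            actions.append(("cancelled", None))  # the user can always bail out
            return actions
        if confidence >= min_confidence and text in commands:
            actions.append(("execute", text))
            return actions
        if attempt >= max_attempts:
            break
        actions.append(("reprompt", "Sorry, say that again?"))
    # Out of attempts (or out of input): show options instead of asking again.
    actions.append(("fallback", "Showing available commands"))
    return actions
```

Capping the number of re-prompts matters more on glasses than on a phone: a user carrying a box or climbing a ladder should not be stuck in a loop of "say that again".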

5. Privacy and Data Ethics

Because the device sees and hears everything around the user, smart glasses raise immediate concerns around privacy, surveillance, and consent – especially in public spaces or B2B environments.

How to solve it:

Include clear visual indicators when cameras or mics are active. Build opt-in permissions. Offer local-only processing for sensitive data (like facial recognition) where possible.
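
Opt-in permissions and an always-accurate recording indicator can be enforced in one place if every capture request goes through a single gate. This is a sketch with a hypothetical `CaptureGate` class; on real hardware, `indicator_on` would drive the LED or on-display icon.

```python
class CaptureGate:
    """Tracks which sensors the wearer has opted into and which are live."""

    def __init__(self):
        self.granted = set()                    # opt-in: empty until the user agrees
        self.active = set()

    @property
    def indicator_on(self) -> bool:
        """The recording indicator is lit whenever any sensor is capturing."""
        return bool(self.active)

    def grant(self, sensor: str) -> None:
        self.granted.add(sensor)

    def revoke(self, sensor: str) -> None:
        self.granted.discard(sensor)
        self.active.discard(sensor)             # revoking also stops live capture

    def start(self, sensor: str) -> None:
        if sensor not in self.granted:
            raise PermissionError(f"{sensor} capture was not granted")
        self.active.add(sensor)

    def stop(self, sensor: str) -> None:
        self.active.discard(sensor)
```

Deriving the indicator from the set of active sensors, rather than toggling it separately, means the app cannot capture with the indicator off by accident.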

6. Testing in Real-World Environments

Simulators can’t replicate walking through a warehouse, operating machinery, or giving a presentation. Many bugs only show up when the device is worn in context.

How to solve it:

Invest time in field testing. Gather feedback from real users doing real tasks – then iterate fast. What looks great on your desk might fall apart in real-world use.

Building for smart glasses isn’t just technically different – it’s experientially different. But if you design with these challenges in mind from the start, your app won’t just work – it’ll feel natural, intuitive, and purpose-built for the future of computing.

How CodingWorkX Can Help

At CodingWorkX, we don’t just build apps – we help pioneer new product experiences on emerging platforms. Smart glasses are reshaping how humans interact with software, and we’re right at the edge of that shift, helping founders, product teams, and innovators bring bold ideas to life through lean, future-ready development.

Whether you’re building a voice-first productivity tool, a hands-free retail assistant, or an enterprise-grade AR overlay app, our team brings the right mix of AI expertise, UX thinking, and hardware-level awareness to make it happen.

We know this space is new – and that’s exactly where we do our best work. From designing contextual flows to choosing the right edge-friendly models, from integrating custom SDKs to creating mobile companion apps – we build with constraints in mind and scalability in sight.

If you’re looking for a partner who understands that developing for AI smart glasses isn’t just a porting exercise – it’s a reinvention – CodingWorkX is ready to help you lead, not follow.

FAQs

Q. How do AI smart glasses work with mobile apps?

Most AI smart glasses connect with companion mobile apps via Bluetooth or Wi-Fi. The mobile app handles user settings, data syncing, deep analytics, and often heavier processing that the glasses can’t handle due to hardware limitations. Some models also allow live streaming, voice command configuration, and device management through the app. This hybrid architecture lets the smart glasses stay lightweight and efficient, while offering users richer control through their phones. At CodingWorkX, we design seamless multi-device experiences to ensure both the glasses and mobile app work together effortlessly.

Q. What tech stack is best for smart glasses apps?

The ideal tech stack depends on your target hardware and use case, but common choices include:

- Frontend: Unity, React Native (with AR add-ons), or native SDKs (Android SDK, Vuzix SDK, etc.)

- Backend: Node.js, Python, or Go for API development

- AI/ML: TensorFlow Lite, ONNX, Whisper, or OpenCV for edge inference

- Cloud: AWS, GCP, or Azure for compute-intensive tasks and sync

- Extras: WebRTC (for live video), Firebase, MQTT (for IoT), and custom NLP engines

We help you choose and implement the right stack based on performance, battery life, and real-world context.

Q. Which smart glasses support third-party apps?

Several leading smart glasses offer third-party app support. Notable examples include:

- Ray-Ban Meta Smart Glasses – SDK still limited but growing.

- Vuzix Blade & Shield Series – Strong Android-based SDKs.

- Magic Leap – Built for spatial computing with Unity integration.

- Rokid, Xreal (formerly Nreal) – Open to developer ecosystems.

- Google Glass Enterprise Edition 2 – Discontinued by Google in 2023, but still in use in some sectors.

CodingWorkX can help you evaluate hardware platforms, navigate SDK documentation, and build apps that are compatible and future-ready.

Q. How much does it cost to build a smart glasses app?

Smart glasses app development costs can range from $25,000 to over $150,000, depending on factors like:

- Use of AR, object recognition, or AI

- Real-time voice/NLP integration

- Cross-platform support (mobile companion + glasses)

- Backend infrastructure and cloud usage

- Testing in real-world environments

Enterprise apps with field-use cases and AI features tend to fall on the higher end. We offer phased MVP development at CodingWorkX to help you start lean and scale with clarity.

Q. What is the best use case for smart glasses apps?

Smart glasses excel in hands-free, on-the-move environments where quick, context-aware interactions are critical. Top use cases include:

- Field service support and maintenance

- Warehouse inventory picking

- Remote medical assistance

- Real-time translation and transcription

- Navigation and tourism

- Onboarding/training with visual instructions

The best use case is one where removing the phone improves efficiency, safety, or speed. We help companies identify and prototype the most viable applications based on their workflows.

Q. Why choose CodingWorkX for wearable app development?

At CodingWorkX, we don’t just adapt mobile apps for smart glasses – we reimagine them for heads-up, hands-free, real-world use. Our team brings together expertise in AI, edge computing, AR frameworks, and real-world UX testing to build apps that are responsive, reliable, and genuinely useful. Whether you’re building for industrial environments, enterprise use, or consumer markets, we bring deep technical skills and product thinking to every stage of development. Let’s build the future of wearable computing – together.